I recently migrated Listomo (my email marketing platform) from Sidekiq to Solid Queue. There are lots of posts out there showcasing how various companies migrated to Solid Queue from Sidekiq but they did not help that much, so hopefully this helps someone else.

Migration Steps

- Install Solid Queue

- Install Mission Control Jobs

- Extend Application Job

- Move the Jobs to /app/jobs

- Migrate from perform_async to perform_later

- Update bulk job processing

- Update custom retry logic in particular jobs

- Update Tests to use new enqueued test helpers

- Remove Redis and Sidekiq gem

- Update your Procfile and Ship It

Install Solid Queue

This one is quite self-explanatory. The docs on the Solid Queue site are on point, but I’ll reiterate here:

bundle add solid_queue

bin/rails solid_queue:install

This will create a few files, namely:

- config/queue.yml – The main Solid Queue config

- config/recurring.yml – Solid Queue recurring jobs config (I don’t use this, so I won’t be covering it; I use the whenever gem for recurring tasks)

- db/queue_schema.rb – The database schema file for Solid Queue

You’ll also need to add a queue block to your database.yml file for each environment, here’s what mine looks like:

development:
  primary:
    <<: *default
    database: listomo_development
  queue:
    <<: *default
    database: listomo_development_queue
    migrations_paths: db/queue_migrate

production:
  primary: &primary_production
    <<: *default
    url: <%= ENV['DATABASE_URL'] %>
  queue:
    <<: *primary_production
    url: <%= ENV['QUEUE_DATABASE_URL'] %>
    migrations_paths: db/queue_migrate

I have my databases hosted with a managed provider for production, so I need to provide the URL for each database. You can keep Solid Queue in the same database as your main app if you’d like. I chose to offload it because an email marketing tool can easily produce very large bursts of jobs (email delivery, etc.), so having a separate database that I can scale up or down for jobs matters for my use case.

If you’re on a managed cloud database provider then you’ll likely need to create that DB by hand via their systems and then get the connection string URL that you can put into an environment variable as I’m doing with QUEUE_DATABASE_URL.

This database needs to exist before you can run the migration that creates the tables.

I ran rails db:migrate on my production deployment and the tables were created in my database. The docs say to use rails db:prepare, and that would likely work if your database user is allowed to create databases. Mine was not, so I created the database by hand and then ran the migrations to get my production system ready.

For development, I run PostgreSQL locally, so running rails db:prepare did the job. The DB was created and migrated accordingly.
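One thing worth double-checking: as far as I know, the installer only sets Solid Queue as the Active Job backend for production. If you also want jobs to go through Solid Queue in development, a sketch along these lines should do it, assuming the database key is named queue in database.yml as in my config above:

```ruby
# config/environments/development.rb
Rails.application.configure do
  # Use Solid Queue instead of Rails' default in-process async adapter.
  config.active_job.queue_adapter = :solid_queue

  # :queue must match the database key in config/database.yml.
  config.solid_queue.connects_to = { database: { writing: :queue } }
end
```

Without this, development keeps using the default adapter and nothing shows up in the queue database.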

Install Mission Control Jobs

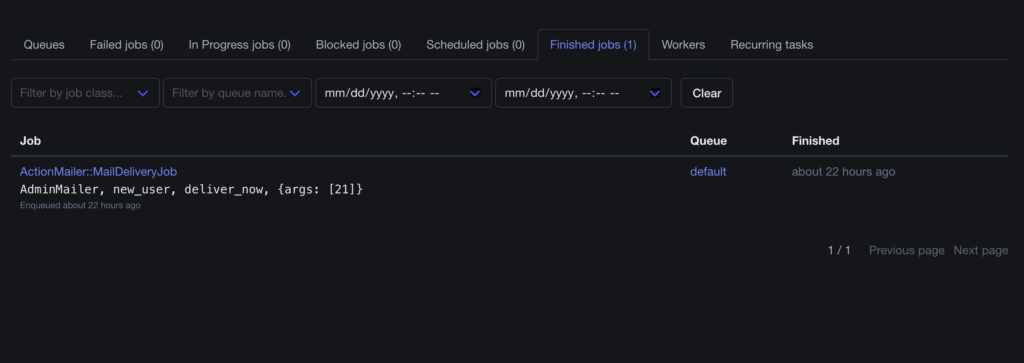

Mission Control Jobs is a simple job dashboard that gives you visibility and some UI functionality into the various background processing job in Solid Queue. You can inspect the queues, successful jobs, failed jobs, recurring tasks, etc.

It looks like this:

You’ll need to add a gem for this:

gem "mission_control-jobs"

Then in your routes file you’ll want to add this:

mount MissionControl::Jobs::Engine, at: "/jobs"

Please note, you will want to secure this URL. The docs describe several options.

I use Jumpstart Pro, which comes preconfigured with a secure admin area. There, the dashboard is mounted at /admin/jobs and can only be accessed by admin users.

Extending ApplicationJob (Updating the Sidekiq Jobs)

All of my Sidekiq jobs looked like one of these two:

class DeliverBroadcastEmailJob
  include Sidekiq::Job
  sidekiq_options queue: :broadcast

  def perform(...)
    # the job
  end
end

# or

class WebhookJob
  include Sidekiq::Job

  def perform(...)
    # the job
  end
end

To migrate these types of jobs I had to do the following:

- Remove include Sidekiq::Job

- Extend ApplicationJob

- Optionally update the specified queue with the ActiveJob syntax

Here’s how these same two jobs look with ActiveJob (Solid Queue in this case):

class DeliverBroadcastEmailJob < ApplicationJob
  queue_as :broadcast

  def perform(...)
    # the job
  end
end

# or

class WebhookJob < ApplicationJob
  def perform(...)
    # the job
  end
end

That’s it. Easy peasy. Now we just need to update how the jobs get invoked.

Move Jobs to /app/jobs

By default, your Sidekiq jobs are stored in the /app/sidekiq folder. You’ll want to move all of your jobs to /app/jobs, the conventional ActiveJob location. This is not required, but why would you want your ActiveJobs inside of a folder called sidekiq? Seems confusing.

You’ll want to do the same thing for your tests.

Move your tests from /test/sidekiq to /test/jobs.

Migrate perform calls

When launching your jobs, you’ll need to migrate from the Sidekiq syntax to the ActiveJob syntax that Solid Queue uses. You’ll want to replace perform_async with perform_later and perform_at with .set(...).perform_later as shown here:

- WebhookJob.perform_async(webhook_event.id)
+ WebhookJob.perform_later(webhook_event.id)

# For Timed Jobs
- DeliverBroadcastEmailJob.perform_at(1.hour.from_now, broadcast_id)
+ DeliverBroadcastEmailJob.set(wait: 1.hour).perform_later(broadcast_id)

You’ll want to update this in any place where you call the job, which could be in regular code or test code.
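Tracking down every call site by hand gets tedious, so here is a small sketch of a scanner that flags leftover Sidekiq-style calls in a chunk of source code and suggests the ActiveJob counterpart. The names SIDEKIQ_TO_ACTIVE_JOB, CALL_PATTERN and find_sidekiq_calls are made up for this example, and the suggestions are only hints: for perform_at I list set(wait_until: ...) because it takes a timestamp, but set(wait: 1.hour) as in the diff above works just as well for relative delays.

```ruby
# Sidekiq-style class methods and their Active Job counterparts.
SIDEKIQ_TO_ACTIVE_JOB = {
  "perform_async"  => "perform_later",
  "perform_at"     => "set(wait_until: ...).perform_later",
  "perform_in"     => "set(wait: ...).perform_later",
  "perform_inline" => "perform_now",
  "perform_bulk"   => "ActiveJob.perform_all_later"
}.freeze

# Matches ".perform_async", ".perform_at", etc. as whole method names.
CALL_PATTERN = /\.(#{SIDEKIQ_TO_ACTIVE_JOB.keys.join("|")})\b/

# Returns [line_number, sidekiq_method, suggested_replacement] for each match.
def find_sidekiq_calls(source)
  source.each_line.with_index(1).flat_map do |line, number|
    line.scan(CALL_PATTERN).map do |(name)|
      [number, name, SIDEKIQ_TO_ACTIVE_JOB.fetch(name)]
    end
  end
end

sample = <<~RUBY
  WebhookJob.perform_async(webhook_event.id)
  DeliverBroadcastEmailJob.perform_at(1.hour.from_now, broadcast_id)
  WebhookJob.perform_later(webhook_event.id)
RUBY

find_sidekiq_calls(sample).each { |row| puts row.join("  ") }
```

In a real project you would feed it File.read(path) for every file under app, lib and test. It is a plain text match, so treat the results as a to-do list and not as proof that nothing was missed.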

If you use perform_inline from Sidekiq, then you’ll want to migrate to perform_now:

- WebhookJob.perform_inline(webhook_event.id)
+ WebhookJob.perform_now(webhook_event.id)

Update bulk job processing

Sidekiq has a nice feature, perform_bulk, that lets you enqueue jobs in bulk. With ActiveJob you’ll need to switch your bulk enqueuing to perform_all_later:

- Delivery.perform_bulk(array_of_ids)
+ jobs = contacts.ids.map { |contact_id| DeliveryJob.new(delivery.id, contact_id) }
+ ActiveJob.perform_all_later(jobs)

I advise that you use this with the in_batches helper (docs) so the work is done in batches over large datasets.

Example:

contacts.in_batches do |batch| # in_batches defaults to 1000 records at a time.
  jobs = batch.ids.map { |contact_id| DeliveryJob.new(delivery.id, contact_id) }
  ActiveJob.perform_all_later(jobs)
end

Now, when you have a large number of jobs to enqueue, they are enqueued a batch at a time instead of one by one.
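If you start from a plain array of ids (like array_of_ids in the Sidekiq call above) rather than an ActiveRecord relation, each_slice can play the same role as in_batches. Below is a rough, framework-free sketch of that idea. JobStub and enqueue_in_slices are made-up names for illustration. In the app you would build real DeliveryJob instances and hand them to ActiveJob.perform_all_later where the block is called.

```ruby
# Stand-in for an Active Job instance (hypothetical, for illustration only).
JobStub = Struct.new(:delivery_id, :contact_id)

# Builds one job per contact id and hands the jobs to the given block in
# slices, so no single bulk enqueue grows without bound.
# Returns the total number of jobs that were handed over.
def enqueue_in_slices(delivery_id, contact_ids, slice_size: 1000, &bulk_enqueue)
  contact_ids.each_slice(slice_size).sum do |slice|
    jobs = slice.map { |contact_id| JobStub.new(delivery_id, contact_id) }
    bulk_enqueue.call(jobs) # in the app: ActiveJob.perform_all_later(jobs)
    jobs.size
  end
end

batch_sizes = []
total = enqueue_in_slices(42, (1..2500).to_a) { |jobs| batch_sizes << jobs.size }

puts "#{total} jobs in batches of #{batch_sizes.inspect}"
# prints "2500 jobs in batches of [1000, 1000, 500]"
```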

Update Custom Retry Logic

Some jobs might need custom retry logic. For example, we have WebHook support in Listomo, and sometimes WebHooks get rejected because a server is down or because of latency or network problems, so we retry them. We want a backoff that gets longer each time. This was implemented in Sidekiq with sidekiq_retry_in, and can be re-implemented in ActiveJob with retry_on and the :polynomially_longer option (hat tip to Daniel Westendorf on X).

The :polynomially_longer option follows the same idea as the Sidekiq code below, though the timings are not identical: it raises the execution count to the 4th power rather than the 5th, and its jitter scales with the wait instead of being a fixed 30-second to 10-minute range. From the docs:

:polynomially_longer, which applies the wait algorithm of ((executions**4) + (Kernel.rand * (executions**4) * jitter)) + 2 (first wait ~3s, then ~18s, then ~83s, etc)
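To get a feel for what those numbers mean for a job with attempts: 11, here is a minimal sketch that reproduces the documented formula in plain Ruby. The polynomially_longer_wait helper is my own name, not part of Rails, and I set the jitter to 0 so the output is deterministic (the documented Rails default is 0.15).

```ruby
# Approximate wait, in seconds, that the :polynomially_longer strategy
# applies after execution number `executions`, per the documented formula.
# `jitter` is the random fraction added on top of the base wait.
def polynomially_longer_wait(executions, jitter: 0.0)
  base = executions**4
  (base + (Kernel.rand * base * jitter) + 2).to_i
end

# attempts: 11 means up to 10 retries, so executions runs from 1 to 10.
waits = (1..10).map { |executions| polynomially_longer_wait(executions) }

puts waits.inspect
# prints [3, 18, 83, 258, 627, 1298, 2403, 4098, 6563, 10002]
puts "total: #{waits.sum} seconds (~#{(waits.sum / 3600.0).round(1)} hours)"
# prints "total: 25353 seconds (~7.0 hours)"
```

Ignoring jitter, the ten retries add up to roughly 7 hours. That is a noticeably shorter window than the Sidekiq setup below, so raise attempts or pass your own wait if you need retries to keep going for longer.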

Here’s an example of how we did it in Sidekiq and now how we do it in ActiveJob:

class WebhookJob
  include Sidekiq::Job
  sidekiq_options retry: 10, dead: false

  sidekiq_retry_in do |retry_count|
    # Exponential backoff, with a random 30-second to 10-minute "jitter"
    # added in to help spread out any webhook "bursts."
    # retries for about ~3 days
    jitter = rand(30.seconds..10.minutes).to_i
    (retry_count**5) + jitter
  end

  def perform(...)
    # job details
  end
end

Here’s how to do it in ActiveJob with Solid Queue:

class WebhookJob < ApplicationJob
  # :polynomially_longer, which applies the wait algorithm of:
  # ((executions**4) + (Kernel.rand * (executions**4) * jitter)) + 2 (first wait ~3s, then ~18s, then ~83s, etc)
  # attempts: 11 = 10 retries + 1 initial attempt
  retry_on StandardError, wait: :polynomially_longer, attempts: 11

  def perform(...)
    # job details
  end
end

Now you will have a retry mechanism in ActiveJob whose wait grows with every attempt. Please note that ActiveJob does not retry failed jobs by default; you need to configure this yourself.
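If you would rather keep the original Sidekiq timing, retry_on also accepts a proc for wait, which is called with the number of executions so far. Here is a hedged sketch of what that could look like. SIDEKIQ_STYLE_WAIT is a name I made up, and the "- 1" rests on my understanding that ActiveJob counts executions from 1 while Sidekiq’s retry_count starts at 0, so verify that against your versions.

```ruby
# Hypothetical wait calculation mirroring the Sidekiq block above:
# fifth-power backoff plus a random 30-second to 10-minute jitter.
# Assumption: executions is 1 on the first retry, whereas Sidekiq's
# retry_count is 0 there, hence the "- 1".
SIDEKIQ_STYLE_WAIT = lambda do |executions|
  jitter = rand(30..600) # seconds
  ((executions - 1)**5) + jitter
end

# In the job class this would be passed as the wait option:
#   retry_on StandardError, wait: SIDEKIQ_STYLE_WAIT, attempts: 11

first_wait = SIDEKIQ_STYLE_WAIT.call(1)  # between 30 and 600 seconds
last_wait  = SIDEKIQ_STYLE_WAIT.call(10) # 59_049 seconds plus the jitter

puts "first retry after #{first_wait}s, tenth after #{last_wait}s"
```

I use plain integer seconds here so the snippet runs outside Rails. Inside the app, rand(30.seconds..10.minutes).to_i from the Sidekiq version works the same way.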

Update Tests to Use Queue Test Helpers

This step seems to be missing from all the tutorials and migration walkthroughs I saw. In my codebases I verify that certain actions enqueue jobs.

In Sidekiq it was often done like this:

assert_difference -> { DeliverBroadcastEmailJob.jobs.size }, 1 do
  DeliverBroadcastEmailJob.perform_async(@broadcast.id, contact.id)
end

If you attempt to keep this as is, and update the code to perform_later you’ll get this error:

NoMethodError: undefined method ‘jobs_count’ for an instance of ActiveJob::QueueAdapters::TestAdapter

When you migrate to ActiveJob and Solid Queue you’ll need to include ActiveJob::TestHelper in your tests. It provides various utilities like:

- assert_enqueued_jobs

- assert_no_enqueued_jobs

- enqueued_jobs

Usage is as such:

# assert_enqueued_jobs
assert_enqueued_jobs 1, only: DoubleConfirmationDeliveryJob do
  Contact.create(list: list)
end

# assert_no_enqueued_jobs
assert_no_enqueued_jobs only: DoubleConfirmationDeliveryJob do
  Contact.create(list: list, skip_double_confirmation: true)
end

# enqueued_jobs
# Check to see if any jobs are queued up before you do something
assert_equal 0, enqueued_jobs.count { |job| job[:job] == DeliveryJob }

Keep running your tests until they pass. Having a solid test suite saved me a ton of headaches in this migration. I was able to verify what broke vs what did not break very quickly.

Remove Redis and Sidekiq Gems

At this point I was able to remove the Redis and Sidekiq gems and remove the Sidekiq routes from my routes file.

Routes …

- require "sidekiq/web"
- mount Sidekiq::Web => "/sidekiq"

… and the gems …

bundle remove redis
bundle remove sidekiq
bundle

If you’re no longer using your Redis instance, you can now delete that as well. Be careful though … Action Cable is sometimes configured to use Redis. If that’s the case you’ll want to migrate to Solid Cable before you delete your Redis instance.

Once these gems were removed, I was free and clear of Sidekiq 🎉.

Update your Procfile and Ship It

If you’re using a Procfile you’ll want to update it:

web: bin/rails server
- worker: bundle exec sidekiq
+ worker: bundle exec rake solid_queue:start

This will work for any hosting provider that uses a Procfile. If you don’t use one, you’ll want to update your server config so that Solid Queue starts when your app starts; otherwise your background processing won’t work.

That’s about it really. Now you can submit jobs and view them in the Mission Control Jobs dashboard. You can query the jobs via SQL because they’re all in a table, and you can build more tooling on top of them if you want.

You can run this on a single server with just one DB if you want or break it apart into different DBs for production (like I have).

I hope that helps. ✌️
